¶ Services

Before starting, ensure you have your cloud workspace IDE or your local environment set up with the required services:

- dbt-bigquery/dbt-snowflake: The dbt engine for working with BigQuery/Snowflake data models and transformation environments.

- Package BigQuery: [Package link]

- Package Snowflake: [Package link]

- Lightdash: The Lightdash CLI for generating metadata for dbt model YAML files.

- Lightdash CLI: [CLI Link]

- DataHub: Used for generating and testing DataCatalog/Governance uploads.

- DataHub CLI: [CLI Link]

- ReData: For generating and testing Data Quality outputs.

- ReData CLI: [CLI Link]

- YAMLLint: To check and fix dbt project YAML file formatting.

- YAMLLint Service: [Service Link]

- SQLFluff: For checking, testing, and fixing dbt project SQL model file formatting.

- SQLFluff Service: [Service Link]

- SQLFluff dbt templater service: [Templater Link]

- Git: To manage your code states and versioning.

- Git Service: [Service Link]

- Google Cloud SDK: Required if using BigQuery.

- gcloud SDK: [SDK Link]

- Snowflake: Required if using Snowflake.

- snowsql SDK: [SDK Link]

- dbt-dry-run: Required for BigQuery dry runs.

- Package dbt-dry-run: [Package Link]

¶ Visual Studio Code UI:

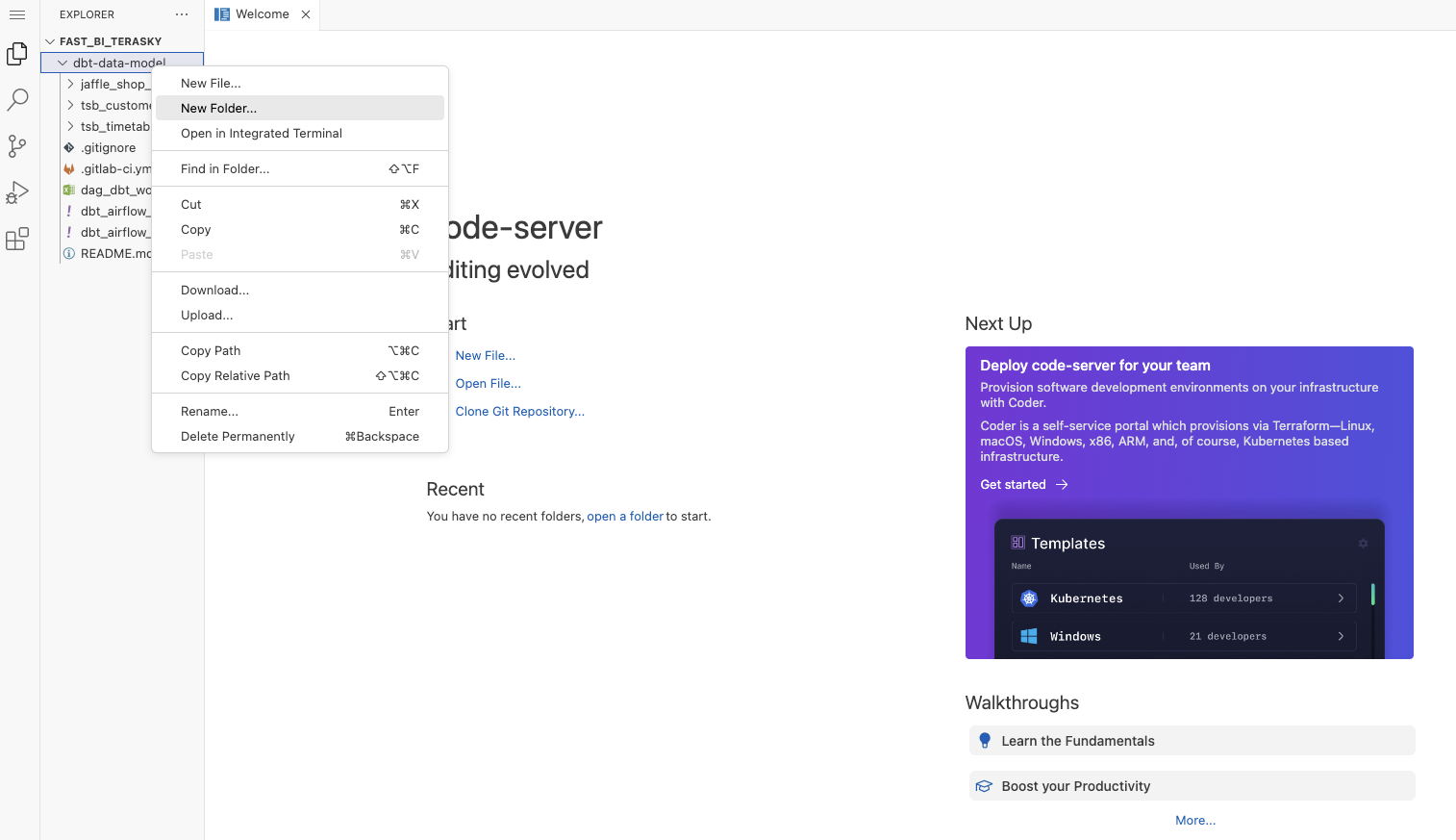

If you have followed the "Visual Studio Code - How to Start" page, you should already have cloned the data model repository and your dbt projects. Let's get started.

Starting with a freshly cloned repository, right-click on the folder named dbt-data-model. In the open window, select "Open in Integrated Terminal."

¶ Before You Start - dbt Fundamentals

¶ dbt Fundamentals

Learn the foundational steps of transforming data in dbt. Start by connecting dbt to a data warehouse and Git repository, then explore key concepts like modeling, sources, testing, documentation, and deployment. Get hands-on by building a model and running tests in dbt Cloud. Course duration: approximately 5.0 hours.

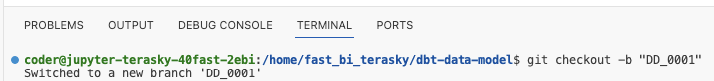

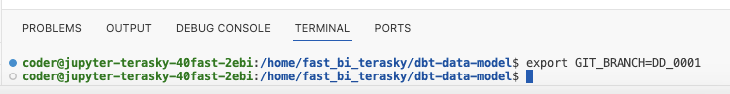

¶ Creating a New Branch

Before making any changes to the main branch of your dbt-data-model repository, create your own branch. For example, if you're starting a new data model, your branch name could be DD_0001, where DD stands for Data Deployment and 0001 is a unique identifier. It's best to use an alphanumeric format for branch names. Run the following command in the open terminal:

- Command: git checkout -b "DD_0001"

You've successfully created a new branch named DD_0001. Now set the GIT_BRANCH environment variable, which profiles.yml reads to derive the dataset names for the dev and test targets:

- Command: export GIT_BRANCH=DD_0001

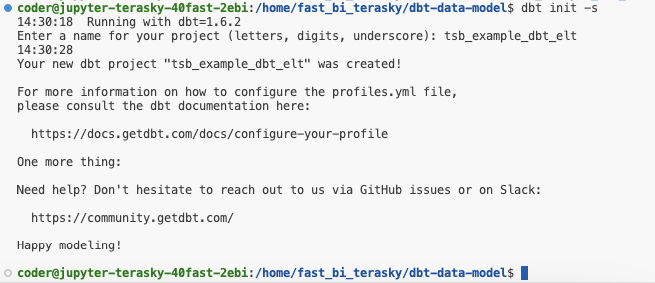
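The naming convention described above can be checked before the branch is created. The following is a minimal sketch in Python; the helper names `is_valid_branch_name` and `checkout_command`, and the exact pattern (a `DD` prefix followed by underscore-separated alphanumeric groups), are assumptions made for illustration and not a rule enforced by the platform.

```python
import re

# Hypothetical pattern: "DD" prefix, then one or more alphanumeric
# groups separated by underscores (e.g. DD_0001, DD_250422_H33BX).
BRANCH_PATTERN = re.compile(r"^DD(_[A-Za-z0-9]+)+$")


def is_valid_branch_name(name: str) -> bool:
    """Return True if the name follows the assumed DD_<identifier> convention."""
    return BRANCH_PATTERN.fullmatch(name) is not None


def checkout_command(name: str) -> list[str]:
    """Build the git command shown above; raise on a non-conforming name."""
    if not is_valid_branch_name(name):
        raise ValueError(f"unexpected branch name: {name!r}")
    return ["git", "checkout", "-b", name]
```

The returned list can be passed to `subprocess.run` if you prefer to script the step instead of typing it in the terminal.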

¶ Creating a New DBT Project (Optional - if the user used dbt Project initialization)

If you're starting with a new dbt project, follow these steps to set up the project. Skip this step if you're already familiar with the dbt folder structure.

Initiate a new dbt project with the following command. We will modify this project later. Running this command will create a new folder:

- Command: dbt init -s

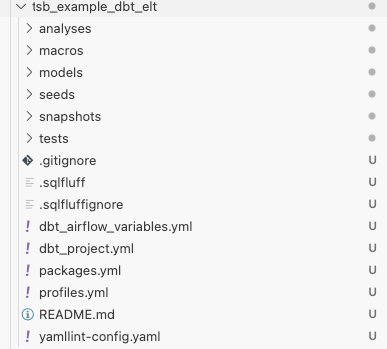

Provide your new dbt project name; for example: tsb_example_dbt_elt.

Your new dbt project is currently empty. To prepare it for running dbt transformations in the Fast.BI platform, ensure your dbt project folder contains all the necessary files listed here:

- analyses - [ Link ]

- macros - [ Link ]

- models - [ Link ]

- seeds - [ Link ]

- snapshots - [ Link ]

- tests - [ Link ]

- .gitignore - [ Link ]

- .sqlfluff - [ Link ]

- .sqlfluffignore - [ Link ]

- dbt_project.yml - [ Link ]

- packages.yml - [ Link ]

- profiles.yml - [ Link ]

- README.md - [ Link ] or [ Link ]

- yamllint-config.yaml - [ Link ]

- dbt_airflow_variables.yml - [ Link ]
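To confirm that a project folder contains everything in the list above, a small check such as the following may help. It is a sketch only: the function name `missing_entries` is hypothetical, and the split into directories and files is an assumption based on the names in the list.

```python
from pathlib import Path

# Names taken from the list above; directories and files are checked separately.
REQUIRED_DIRS = ["analyses", "macros", "models", "seeds", "snapshots", "tests"]
REQUIRED_FILES = [
    ".gitignore",
    ".sqlfluff",
    ".sqlfluffignore",
    "dbt_project.yml",
    "packages.yml",
    "profiles.yml",
    "README.md",
    "yamllint-config.yaml",
    "dbt_airflow_variables.yml",
]


def missing_entries(project_dir: str) -> list[str]:
    """Return the required directories and files that are absent."""
    root = Path(project_dir)
    missing = [d for d in REQUIRED_DIRS if not (root / d).is_dir()]
    missing += [f for f in REQUIRED_FILES if not (root / f).is_file()]
    return missing
```

For example, `missing_entries("tsb_example_dbt_elt")` returns an empty list once the project folder is complete.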

¶ Configuring the main DBT Project Files (Optional - if the user used dbt Project initialization)

Let's configure the main files to test our first dbt flow.

- profiles.yml - Connection to Data Warehouse example: BigQuery

    jaffle_shop_dbt_elt_k8s:
      target: sa
      outputs:
        sa:
          type: bigquery
          method: oauth
          project: fast-bi-analytics
          dataset: prod
          priority: batch
          threads: 35
          location: europe-central2
          job_execution_timeout_seconds: 3600
          job_retries: 1
          impersonate_service_account: dbt-sa@fast-bi-terasky.iam.gserviceaccount.com
        test:
          type: bigquery
          method: oauth
          project: fast-bi-analytics
          dataset: "e2e_test_{{ modules.re.sub('[^a-zA-Z0-9]', '_', env_var('GIT_BRANCH').lower()) }}"
          priority: batch
          threads: 35
          location: europe-central2
          job_execution_timeout_seconds: 3600
          job_retries: 1
          impersonate_service_account: dbt-sa@fast-bi-terasky.iam.gserviceaccount.com
        dev:
          type: bigquery
          method: oauth
          project: fast-bi-analytics
          dataset: "dev_test_{{ modules.re.sub('[^a-zA-Z0-9]', '_', env_var('GIT_BRANCH').lower()) }}"
          location: europe-central2
          threads: 35
          job_execution_timeout_seconds: 300
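The `dataset` expressions in the test and dev targets lower-case the GIT_BRANCH value and replace every non-alphanumeric character with an underscore; in dbt's Jinja context, `modules.re` is Python's `re` module. The sketch below reproduces that logic in plain Python so the resulting name can be predicted. The function name `dataset_name` and its `prefix` parameter are hypothetical.

```python
import re


def dataset_name(prefix: str, git_branch: str) -> str:
    """Mirror the Jinja expression: lower-case the branch name, then
    replace every non-alphanumeric character with an underscore."""
    return prefix + re.sub("[^a-zA-Z0-9]", "_", git_branch.lower())


# Branch name used later in this guide, with the dev target's prefix.
print(dataset_name("dev_test_", "DD_250422_H33BX"))
```

Because the expression calls `env_var('GIT_BRANCH')` without a default value, the variable has to be exported before dbt is run against the dev or test target.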

- dbt_project.yml - Verify that the profile name matches the name on line 1 of profiles.yml.

    name: 'jaffle_shop_dbt_elt_k8s'
    config-version: 2
    version: '1.0.0'
    profile: 'jaffle_shop_dbt_elt_k8s'
    model-paths: ["models"]
    seed-paths: ["seeds"]
    test-paths: ["tests"]
    analysis-paths: ["analyses"]
    macro-paths: ["macros"]
    target-path: "target"
    clean-targets:
      - "target"
      - "dbt_modules"
      - "logs"
      - "dbt_packages"
    models:
      jaffle_shop_dbt_elt_k8s:
        jaffle_shop:
          +schema: jaffle_shop_data
          materialized: table
          +re_data_monitored: true
          +re_data_owners: ['data-bi-team']
          staging:
            +schema: jaffle_shop_data
            materialized: view
            +re_data_monitored: true
            +re_data_owners: ['data-bi-team']
        tsb:
          +schema: jaffle_shop_data
          materialized: table
          +re_data_monitored: true
          +re_data_owners: ['data-bi-team']
      re_data:
        enable: true
        +schema: jaffle_shop_data
        internal:
          +schema: jaffle_shop_data
    seeds:
      +schema: jaffle_shop_data
      +re_data_monitored: false
      +re_data_owners: ['data-bi-team']
    snapshots:
      +schema: jaffle_shop_data_k8s
      +re_data_monitored: false
      +re_data_owners: ['data-bi-team']
    vars:
      execution_date: '{{ dbt_airflow_macros.ds(timezone=none) }}'
      data_interval_start: '{{ data_interval_start }}'
      data_interval_end: '{{ data_interval_end }}'
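The `profile` value in dbt_project.yml has to name a profile that exists in profiles.yml. A quick way to check this without a YAML library is sketched below; it relies on simple regular expressions rather than a real YAML parser, so it only handles the plain layout used in this guide. All function names are hypothetical.

```python
import re
from typing import Optional


def project_profile(dbt_project_text: str) -> Optional[str]:
    """Return the value of the top-level `profile:` key, without quotes."""
    match = re.search(
        r"^profile:\s*['\"]?([^'\"\s#]+)", dbt_project_text, re.MULTILINE
    )
    return match.group(1) if match else None


def top_level_profiles(profiles_text: str) -> list[str]:
    """Return the unindented keys of profiles.yml (one per profile)."""
    return re.findall(r"^([A-Za-z0-9_\-]+):", profiles_text, re.MULTILINE)


def profile_is_defined(dbt_project_text: str, profiles_text: str) -> bool:
    """True if the project's profile name appears as a key in profiles.yml."""
    return project_profile(dbt_project_text) in top_level_profiles(profiles_text)
```

In practice you would pass in the contents of both files, for example `Path("dbt_project.yml").read_text()`. The `dbt debug` command performs a fuller version of this validation.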

- packages.yml - Configure the packages.

    packages:
      - package: yu-iskw/dbt_airflow_macros
        version: 0.3.0
      - package: re-data/re_data
        version: [">=0.10.0", "<0.12.0"]
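The re_data entry uses a version range instead of a fixed version. The sketch below illustrates how such a range can be evaluated for plain `x.y.z` versions. It is a simplified illustration, not dbt's own resolver, which follows semantic versioning rules (including pre-release handling) that this example ignores. The function names are hypothetical.

```python
import operator

# Two-character operators are listed first so ">=" is not read as ">".
OPERATORS = {
    ">=": operator.ge,
    "<=": operator.le,
    "==": operator.eq,
    ">": operator.gt,
    "<": operator.lt,
}


def parse_version(text: str) -> tuple:
    """Turn '0.11.0' into (0, 11, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.strip().split("."))


def satisfies(version: str, specifiers: list) -> bool:
    """Return True if the version meets every specifier in the list."""
    for spec in specifiers:
        for symbol, compare in OPERATORS.items():
            if spec.startswith(symbol):
                bound = parse_version(spec[len(symbol):])
                if not compare(parse_version(version), bound):
                    return False
                break
        else:
            raise ValueError(f"unsupported specifier: {spec!r}")
    return True
```

With the range from packages.yml, `satisfies("0.11.0", [">=0.10.0", "<0.12.0"])` holds, while version 0.12.0 falls outside the range.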

- dbt_airflow_variables.yml - Necessary for running a dbt pipeline flow in the Fast.BI platform.

    K8S_SECRETS_DBT_PRJ_JAFFLE_SHOP_DBT_ELT_K8S:
      PLATFORM: 'Airflow'
      NAMESPACE: 'data-orchestration'
      OPERATOR: 'k8s'
      POD_NAME: 'dbt-k8s'
      GIT_URL: 'https://gitlab.fast.bi/'
      DAG_ID: 'k8s_operator_jaffle_shop_dbt_elt_k8s'
      DAG_SCHEDULE_INTERVAL: '@once'
      DAG_TAG: ['gitlab', 'k8s_operator_dbt', 'argo']
      PROJECT_ID: 'fast-bi-analytics'
      PROJECT_LEVEL: 'NON_PROD'
      IMAGE: 'europe-central2-docker.pkg.dev/fast-bi-common/bi-platform/tsb-dbt-core:v1.9.1'
      DBT_PROJECT_NAME: 'jaffle_shop_dbt_elt_k8s'
      DBT_PROJECT_DIRECTORY: 'jaffle_shop_dbt_elt_k8s'
      MANIFEST_NAME: 'jaffle_shop_dbt_elt_k8s_manifest'
      DBT_SEED: 'True'
      DBT_SEED_SHARDING: 'True'
      DBT_SOURCE: 'True'
      DBT_SOURCE_SHARDING: 'True'
      DBT_SNAPSHOT: 'True'
      DBT_SNAPSHOT_SHARDING: 'True'
      DEBUG: 'False'
      MODEL_DEBUG_LOG: 'False'
      DATA_QUALITY: 'True'
      DATAHUB_ENABLED: 'True'
      DATA_ANALYSIS_PROJECT: '2444b5a8-642b-49b4-bb35-636882b8668d'
      DAG_OWNER: "fast.bi analytics"
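In the example above, several values embed the dbt project name: the top-level key, DAG_ID, MANIFEST_NAME, DBT_PROJECT_NAME, and DBT_PROJECT_DIRECTORY. The sketch below derives those names from a project name so they can be compared against a new configuration. Note that this pattern is read off the single example in this guide and is an assumption, not a documented Fast.BI rule. The function name `expected_names` is hypothetical.

```python
def expected_names(project_name: str) -> dict[str, str]:
    """Derive the names that, in the example above, embed the project name.

    The prefixes and suffixes are inferred from that one example only.
    """
    return {
        "top_level_key": "K8S_SECRETS_DBT_PRJ_" + project_name.upper(),
        "DAG_ID": "k8s_operator_" + project_name,
        "MANIFEST_NAME": project_name + "_manifest",
        "DBT_PROJECT_NAME": project_name,
        "DBT_PROJECT_DIRECTORY": project_name,
    }
```

Comparing this dictionary with the values in your own dbt_airflow_variables.yml is a quick way to spot a name that was left over from a copied project.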

¶ Running the first DBT Project commands

Now, you're ready to run your first dbt project commands to compile and test it.

- Command: dbt deps. - [ Link ] Run the following command to install project dependencies:

coder@jupyter-terasky@fast.bi:~/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s$ dbt deps

08:40:24 Running with dbt=1.9.2

08:40:25 Installing yu-iskw/dbt_airflow_macros

08:40:25 Installed from version 0.3.0

08:40:25 Up to date!

08:40:25 Installing re-data/re_data

08:40:25 Installed from version 0.11.0

08:40:25 Up to date!

08:40:26 Installing dbt-labs/dbt_utils

08:40:26 Installed from version 1.1.1

08:40:26 Updated version available: 1.3.0

08:40:26

08:40:26 Updates available for packages: ['dbt-labs/dbt_utils']

Update your versions in packages.yml, then run dbt deps

- Command: dbt debug --target dev. - [ Link ] Run the following command to check your connectivity to your Data Warehouse.

coder@jupyter-terasky@fast.bi:~/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s$ export GIT_BRANCH=DD_250422_H33BX

coder@jupyter-terasky@fast.bi:~/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s$ dbt debug --target dev

08:42:31 Running with dbt=1.9.2

08:42:31 dbt version: 1.9.2

08:42:31 python version: 3.11.11

08:42:31 python path: /usr/bin/python3.11

08:42:31 os info: Linux-6.6.72+-x86_64-with-glibc2.31

08:42:34 Using profiles dir at /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s

08:42:34 Using profiles.yml file at /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/profiles.yml

08:42:34 Using dbt_project.yml file at /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/dbt_project.yml

08:42:34 adapter type: bigquery

08:42:34 adapter version: 1.9.1

08:42:35 Configuration:

08:42:35 profiles.yml file [OK found and valid]

08:42:35 dbt_project.yml file [OK found and valid]

08:42:35 Required dependencies:

08:42:35 - git [OK found]

08:42:35 Connection:

08:42:35 method: oauth

08:42:35 database: fast-bi-analytics

08:42:35 execution_project: fast-bi-analytics

08:42:35 schema: dev_test_dd_250422_h33bx

08:42:35 location: europe-central2

08:42:35 priority: None

08:42:35 maximum_bytes_billed: None

08:42:35 impersonate_service_account: None

08:42:35 job_retry_deadline_seconds: None

08:42:35 job_retries: 1

08:42:35 job_creation_timeout_seconds: None

08:42:35 job_execution_timeout_seconds: 300

08:42:35 timeout_seconds: 300

08:42:35 client_id: None

08:42:35 token_uri: None

08:42:35 dataproc_region: None

08:42:35 dataproc_cluster_name: None

08:42:35 gcs_bucket: None

08:42:35 dataproc_batch: None

08:42:35 Registered adapter: bigquery=1.9.1

08:42:37 Connection test: [OK connection ok]

Note: An error at this stage is most likely caused by missing access rights on Google Cloud Platform. Make sure your account has the necessary permissions, particularly for the BigQuery service.

- Command: dbt parse --target dev. - [ Link ] Run the following command to parse and validate the contents of your dbt project.

coder@jupyter-terasky@fast.bi:~/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s$ dbt parse --target dev

08:44:00 Running with dbt=1.9.2

08:44:02 Registered adapter: bigquery=1.9.1

08:44:02 Unable to do partial parsing because of a version mismatch

08:44:05 Performance info: /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/target/perf_info.json

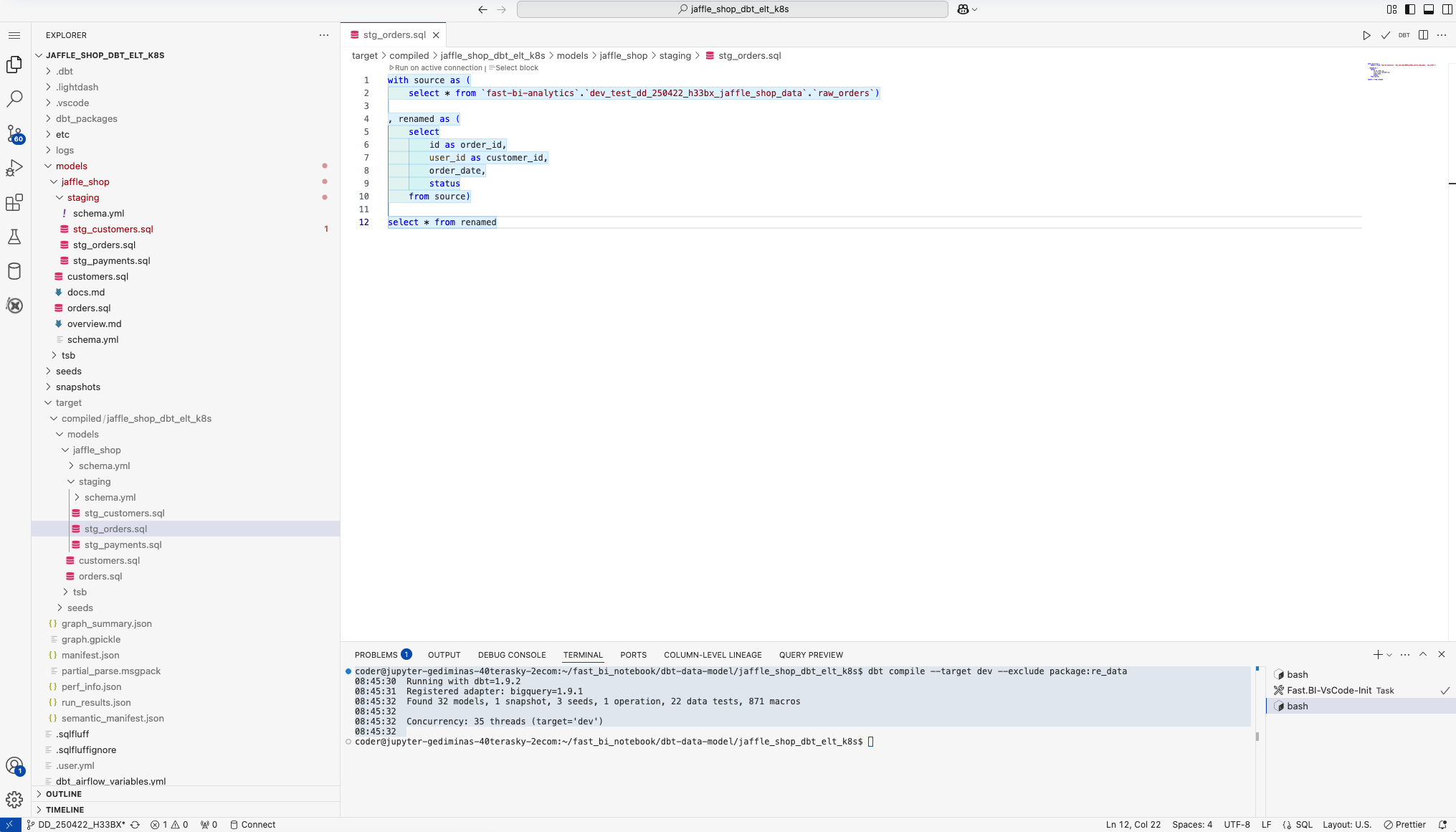

- Command: dbt compile --target dev --exclude package:re_data. [ Link ] Run the following command to compile your dbt project.

coder@jupyter-terasky@fast.bi:~/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s$ dbt compile --target dev --exclude package:re_data

08:45:30 Running with dbt=1.9.2

08:45:31 Registered adapter: bigquery=1.9.1

08:45:32 Found 32 models, 1 snapshot, 3 seeds, 1 operation, 22 data tests, 871 macros

08:45:32

08:45:32 Concurrency: 35 threads (target='dev')

08:45:32

Note: In the command, we use the '--exclude package:re_data' flag. This is necessary because we do not have the re_data (Data Quality) initialization in this step. It will be automatically added by Fast.BI platform automation when we merge our new project into the master branch.

- Command: dbt-dry-run --project-dir . --profiles-dir . --target dev --skip-not-compiled. [ Link ] Finally, check your compiled models in SQL format and perform a dry run on your Data Warehouse. This command is optional.

Note: After running the 'dbt compile' command, a new folder named 'target' is created. Inside this folder, you can find all your compiled model files in SQL. The 'dbt-dry-run' command tests these compiled SQL statements in your Data Warehouse to verify their execution.

With these commands, you can compile, validate, and dry-run your dbt project, ensuring that everything is set up correctly before you proceed with further development and testing.
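The commands in this section can also be run as one scripted sequence that stops at the first failure. The following is a minimal sketch, assuming the `dbt` and `dbt-dry-run` executables are on the PATH and the script is started from the dbt project folder. The function names `validation_commands` and `run_all` are hypothetical.

```python
import subprocess


def validation_commands(target: str = "dev") -> list[list[str]]:
    """Commands from this section, in the order they are introduced."""
    exclude = ["--exclude", "package:re_data"]
    return [
        ["dbt", "deps"],
        ["dbt", "debug", "--target", target],
        ["dbt", "parse", "--target", target],
        ["dbt", "compile", "--target", target, *exclude],
        ["dbt-dry-run", "--project-dir", ".", "--profiles-dir", ".",
         "--target", target, "--skip-not-compiled"],
    ]


def run_all(commands, cwd="."):
    """Run each command in turn; return the first failing command, or None."""
    for argv in commands:
        result = subprocess.run(argv, cwd=cwd)
        if result.returncode != 0:
            return argv
    return None
```

Calling `run_all(validation_commands("dev"))` returns `None` when every step exits with code 0. Remember to export GIT_BRANCH first, since the dev target depends on it.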

¶ Creating the first DBT Project data

Now, you're ready to create the first DataMart with the dbt Labs data transformation engine.

- Command: dbt build --target dev --exclude package:re_data. [ Link ] Create DataMarts using the dbt Labs engine.

coder@jupyter-terasky@fast.bi:~/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s$ dbt build --target dev --exclude package:re_data

09:04:00 Running with dbt=1.9.2

09:04:01 Registered adapter: bigquery=1.9.1

09:04:01 Unable to do partial parsing because profile has changed

09:04:04 Found 34 models, 1 snapshot, 3 seeds, 1 operation, 26 data tests, 871 macros

09:04:04

09:04:04 Concurrency: 35 threads (target='dev')

09:04:04

09:04:10 1 of 38 START sql table model dev_test_dd_250422_h33bx.my_first_dbt_model ...... [RUN]

09:04:10 2 of 38 START sql table model dev_test_dd_250422_h33bx_jaffle_shop_data.tsb_random [RUN]

09:04:10 3 of 38 START seed file dev_test_dd_250422_h33bx_jaffle_shop_data.raw_customers [RUN]

09:04:10 4 of 38 START seed file dev_test_dd_250422_h33bx_jaffle_shop_data.raw_orders ... [RUN]

09:04:10 5 of 38 START seed file dev_test_dd_250422_h33bx_jaffle_shop_data.raw_payments . [RUN]

09:04:12 2 of 38 OK created sql table model dev_test_dd_250422_h33bx_jaffle_shop_data.tsb_random [CREATE TABLE (10.0 rows, 0 processed) in 1.52s]

09:04:12 1 of 38 OK created sql table model dev_test_dd_250422_h33bx.my_first_dbt_model . [CREATE TABLE (2.0 rows, 0 processed) in 1.84s]

09:04:12 6 of 38 START test not_null_my_first_dbt_model_id .............................. [RUN]

09:04:12 7 of 38 START test unique_my_first_dbt_model_id ................................ [RUN]

09:04:12 7 of 38 PASS unique_my_first_dbt_model_id ...................................... [PASS in 0.65s]

09:04:13 6 of 38 FAIL 1 not_null_my_first_dbt_model_id .................................. [FAIL 1 in 0.66s]

09:04:13 8 of 38 SKIP relation dev_test_dd_250422_h33bx.my_second_dbt_model ............. [SKIP]

09:04:13 9 of 38 SKIP test not_null_my_second_dbt_model_id .............................. [SKIP]

09:04:13 10 of 38 SKIP test unique_my_second_dbt_model_id ............................... [SKIP]

09:04:13 3 of 38 OK loaded seed file dev_test_dd_250422_h33bx_jaffle_shop_data.raw_customers [INSERT 100 in 3.09s]

09:04:13 4 of 38 OK loaded seed file dev_test_dd_250422_h33bx_jaffle_shop_data.raw_orders [INSERT 99 in 3.09s]

09:04:13 11 of 38 START sql view model dev_test_dd_250422_h33bx_jaffle_shop_data.stg_customers [RUN]

09:04:13 12 of 38 START test accepted_values_raw_orders_status__completed__returned__return_pending__shipped__placed [RUN]

09:04:13 13 of 38 START test not_null_raw_orders_user_id ................................ [RUN]

09:04:13 11 of 38 OK created sql view model dev_test_dd_250422_h33bx_jaffle_shop_data.stg_customers [CREATE VIEW (0 processed) in 0.39s]

09:04:14 14 of 38 START test not_null_stg_customers_customer_id ......................... [RUN]

09:04:14 15 of 38 START test unique_stg_customers_customer_id ........................... [RUN]

09:04:14 12 of 38 PASS accepted_values_raw_orders_status__completed__returned__return_pending__shipped__placed [PASS in 0.56s]

09:04:14 13 of 38 PASS not_null_raw_orders_user_id ...................................... [PASS in 0.57s]

09:04:14 16 of 38 START sql view model dev_test_dd_250422_h33bx_jaffle_shop_data.stg_orders [RUN]

09:04:14 5 of 38 OK loaded seed file dev_test_dd_250422_h33bx_jaffle_shop_data.raw_payments [INSERT 113 in 3.86s]

09:04:14 17 of 38 START sql view model dev_test_dd_250422_h33bx_jaffle_shop_data.stg_payments [RUN]

09:04:14 16 of 38 OK created sql view model dev_test_dd_250422_h33bx_jaffle_shop_data.stg_orders [CREATE VIEW (0 processed) in 0.29s]

09:04:14 18 of 38 START test accepted_values_stg_orders_status__placed .................. [RUN]

09:04:14 19 of 38 START test not_null_stg_orders_order_id ............................... [RUN]

09:04:14 20 of 38 START test unique_stg_orders_order_id ................................. [RUN]

09:04:14 17 of 38 OK created sql view model dev_test_dd_250422_h33bx_jaffle_shop_data.stg_payments [CREATE VIEW (0 processed) in 0.36s]

09:04:14 21 of 38 START test accepted_values_stg_payments_payment_method__credit_card__coupon__bank_transfer__gift_card [RUN]

09:04:14 22 of 38 START test not_null_stg_payments_payment_id ........................... [RUN]

09:04:14 23 of 38 START test unique_stg_payments_payment_id ............................. [RUN]

09:04:14 15 of 38 PASS unique_stg_customers_customer_id ................................. [PASS in 0.75s]

09:04:14 14 of 38 PASS not_null_stg_customers_customer_id ............................... [PASS in 0.76s]

09:04:15 18 of 38 WARN 4 accepted_values_stg_orders_status__placed ...................... [WARN 4 in 0.76s]

09:04:15 20 of 38 PASS unique_stg_orders_order_id ....................................... [PASS in 0.80s]

09:04:15 19 of 38 PASS not_null_stg_orders_order_id ..................................... [PASS in 0.82s]

09:04:15 22 of 38 PASS not_null_stg_payments_payment_id ................................. [PASS in 0.69s]

09:04:15 21 of 38 PASS accepted_values_stg_payments_payment_method__credit_card__coupon__bank_transfer__gift_card [PASS in 0.76s]

09:04:15 23 of 38 PASS unique_stg_payments_payment_id ................................... [PASS in 0.81s]

09:04:15 24 of 38 START sql table model dev_test_dd_250422_h33bx_jaffle_shop_data.customers [RUN]

09:04:15 25 of 38 START sql incremental model dev_test_dd_250422_h33bx_jaffle_shop_data.orders [RUN]

09:04:17 24 of 38 OK created sql table model dev_test_dd_250422_h33bx_jaffle_shop_data.customers [CREATE TABLE (62.0 rows, 6.0 KiB processed) in 1.87s]

09:04:17 26 of 38 START test not_null_customers_customer_id ............................. [RUN]

09:04:17 27 of 38 START test unique_customers_customer_id ............................... [RUN]

09:04:17 26 of 38 PASS not_null_customers_customer_id ................................... [PASS in 0.47s]

09:04:17 27 of 38 PASS unique_customers_customer_id ..................................... [PASS in 0.49s]

09:04:18 25 of 38 OK created sql incremental model dev_test_dd_250422_h33bx_jaffle_shop_data.orders [CREATE TABLE (99.0 rows, 6.5 KiB processed) in 2.94s]

09:04:18 28 of 38 START test accepted_values_orders_status__placed__return_pending__returned [RUN]

09:04:18 29 of 38 START test not_null_orders_amount ..................................... [RUN]

09:04:18 30 of 38 START test not_null_orders_bank_transfer_amount ....................... [RUN]

09:04:18 31 of 38 START test not_null_orders_coupon_amount .............................. [RUN]

09:04:18 32 of 38 START test not_null_orders_credit_card_amount ......................... [RUN]

09:04:18 33 of 38 START test not_null_orders_customer_id ................................ [RUN]

09:04:18 34 of 38 START test not_null_orders_gift_card_amount ........................... [RUN]

09:04:18 35 of 38 START test not_null_orders_order_id ................................... [RUN]

09:04:18 36 of 38 START test relationships_orders_customer_id__customer_id__ref_customers_ [RUN]

09:04:18 37 of 38 START test unique_orders_order_id ..................................... [RUN]

09:04:19 33 of 38 PASS not_null_orders_customer_id ...................................... [PASS in 0.58s]

09:04:19 35 of 38 PASS not_null_orders_order_id ......................................... [PASS in 0.60s]

09:04:19 30 of 38 PASS not_null_orders_bank_transfer_amount ............................. [PASS in 0.63s]

09:04:19 32 of 38 PASS not_null_orders_credit_card_amount ............................... [PASS in 0.64s]

09:04:19 29 of 38 PASS not_null_orders_amount ........................................... [PASS in 0.67s]

09:04:19 34 of 38 PASS not_null_orders_gift_card_amount ................................. [PASS in 0.67s]

09:04:19 31 of 38 PASS not_null_orders_coupon_amount .................................... [PASS in 0.70s]

09:04:19 37 of 38 PASS unique_orders_order_id ........................................... [PASS in 0.68s]

09:04:19 28 of 38 WARN 2 accepted_values_orders_status__placed__return_pending__returned [WARN 2 in 0.76s]

09:04:19 38 of 38 START snapshot jaffle_shop_snapshot_k8s.snsh_orders ................... [RUN]

09:04:19 36 of 38 PASS relationships_orders_customer_id__customer_id__ref_customers_ .... [PASS in 0.77s]

09:04:22 Data type of snapshot table timestamp columns (TIMESTAMP) doesn't match derived column 'updated_at' (DATE). Please update snapshot config 'updated_at'.

09:04:23 38 of 38 OK snapshotted jaffle_shop_snapshot_k8s.snsh_orders ................... [MERGE (0.0 rows, 12.0 KiB processed) in 4.24s]

09:04:23

09:04:23 1 of 1 START hook: re_data.on-run-end.0 ........................................ [RUN]

09:04:23 1 of 1 OK hook: re_data.on-run-end.0 ........................................... [OK in 0.01s]

09:04:23

09:04:23 Finished running 1 incremental model, 1 project hook, 3 seeds, 1 snapshot, 3 table models, 26 data tests, 4 view models in 0 hours 0 minutes and 18.79 seconds (18.79s).

09:04:23

09:04:23 Completed with 1 error, 0 partial successes, and 2 warnings:

09:04:23

09:04:23 Failure in test not_null_my_first_dbt_model_id (models/example/schema.yml)

09:04:23 Got 1 result, configured to fail if != 0

09:04:23

09:04:23 compiled code at target/compiled/jaffle_shop_dbt_elt_k8s/models/example/schema.yml/not_null_my_first_dbt_model_id.sql

09:04:23

09:04:23 Warning in test accepted_values_stg_orders_status__placed (models/jaffle_shop/staging/schema.yml)

09:04:23 Got 4 results, configured to warn if != 0

09:04:23

09:04:23 compiled code at target/compiled/jaffle_shop_dbt_elt_k8s/models/jaffle_shop/staging/schema.yml/accepted_values_stg_orders_status__placed.sql

09:04:23

09:04:23 Warning in test accepted_values_orders_status__placed__return_pending__returned (models/jaffle_shop/schema.yml)

09:04:23 Got 2 results, configured to warn if != 0

09:04:23

09:04:23 compiled code at target/compiled/jaffle_shop_dbt_elt_k8s/models/jaffle_shop/schema.yml/accepted_values_orders_status__placed__return_pending__returned.sql

09:04:23

09:04:23 Done. PASS=33 WARN=2 ERROR=1 SKIP=3 TOTAL=39

Note: If you encounter failures during this step, don't be concerned. As mentioned before, 'dbt compile' only generates your queries without executing them, and 'dbt-dry-run' only checks whether the queries can run on the Data Warehouse without physically executing them. Real results appear only when 'dbt build' actually runs the models and tests against data. Because you're using the '--target dev' flag, you won't affect any production data. In the run above, the test not_null_my_first_dbt_model_id fails because the model contains a null value, which you may not want in your DataMart; as a result, the dependent model my_second_dbt_model and its tests are skipped.
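The final "Done." line of the build log summarizes the run, and it can be parsed if you want to check the outcome in a script. The sketch below extracts the counters from that line. The function names are hypothetical, and treating warnings as acceptable is an assumption you may want to tighten.

```python
import re


def parse_summary(line: str) -> dict[str, int]:
    """Extract the KEY=value counters from dbt's final 'Done.' line."""
    return {key: int(value) for key, value in re.findall(r"([A-Z]+)=(\d+)", line)}


def build_succeeded(line: str) -> bool:
    """Warnings are tolerated here; any ERROR count above zero is a failure."""
    return parse_summary(line).get("ERROR", 0) == 0


summary = parse_summary("09:04:23 Done. PASS=33 WARN=2 ERROR=1 SKIP=3 TOTAL=39")
print(summary["ERROR"], summary["SKIP"])
```

For the first run above this reports one error and three skipped nodes, which matches the failed test and the skipped my_second_dbt_model with its two tests.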

- If you encounter issues or errors in your model, take the necessary steps to address them. Once you've fixed the issues, you can re-run the same command:

dbt build --target dev --exclude package:re_data

coder@jupyter-terasky@fast.bi:~/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s$ dbt build --target dev --exclude package:re_data

09:06:09 Running with dbt=1.9.2

09:06:10 Registered adapter: bigquery=1.9.1

09:06:10 Unable to do partial parsing because config vars, config profile, or config target have changed

09:06:10 Unable to do partial parsing because profile has changed

09:06:14 Found 34 models, 1 snapshot, 3 seeds, 1 operation, 26 data tests, 871 macros

09:06:14

09:06:14 Concurrency: 35 threads (target='dev')

09:06:14

09:06:16 1 of 38 START sql table model dev_test_dd_250422_h33bx.my_first_dbt_model ...... [RUN]

09:06:16 2 of 38 START sql table model dev_test_dd_250422_h33bx_jaffle_shop_data.tsb_random [RUN]

09:06:16 3 of 38 START seed file dev_test_dd_250422_h33bx_jaffle_shop_data.raw_customers [RUN]

09:06:16 4 of 38 START seed file dev_test_dd_250422_h33bx_jaffle_shop_data.raw_orders ... [RUN]

09:06:16 5 of 38 START seed file dev_test_dd_250422_h33bx_jaffle_shop_data.raw_payments . [RUN]

09:06:18 2 of 38 OK created sql table model dev_test_dd_250422_h33bx_jaffle_shop_data.tsb_random [CREATE TABLE (10.0 rows, 0 processed) in 2.00s]

09:06:18 1 of 38 OK created sql table model dev_test_dd_250422_h33bx.my_first_dbt_model . [CREATE TABLE (1.0 rows, 0 processed) in 2.01s]

09:06:18 6 of 38 START test not_null_my_first_dbt_model_id .............................. [RUN]

09:06:18 7 of 38 START test unique_my_first_dbt_model_id ................................ [RUN]

09:06:18 6 of 38 PASS not_null_my_first_dbt_model_id .................................... [PASS in 0.45s]

09:06:18 7 of 38 PASS unique_my_first_dbt_model_id ...................................... [PASS in 0.50s]

09:06:18 8 of 38 START sql view model dev_test_dd_250422_h33bx.my_second_dbt_model ...... [RUN]

09:06:18 5 of 38 OK loaded seed file dev_test_dd_250422_h33bx_jaffle_shop_data.raw_payments [INSERT 113 in 2.80s]

09:06:18 9 of 38 START sql view model dev_test_dd_250422_h33bx_jaffle_shop_data.stg_payments [RUN]

09:06:18 8 of 38 OK created sql view model dev_test_dd_250422_h33bx.my_second_dbt_model . [CREATE VIEW (0 processed) in 0.33s]

09:06:18 10 of 38 START test not_null_my_second_dbt_model_id ............................ [RUN]

09:06:18 11 of 38 START test unique_my_second_dbt_model_id .............................. [RUN]

09:06:19 9 of 38 OK created sql view model dev_test_dd_250422_h33bx_jaffle_shop_data.stg_payments [CREATE VIEW (0 processed) in 0.32s]

09:06:19 12 of 38 START test accepted_values_stg_payments_payment_method__credit_card__coupon__bank_transfer__gift_card [RUN]

09:06:19 13 of 38 START test not_null_stg_payments_payment_id ........................... [RUN]

09:06:19 14 of 38 START test unique_stg_payments_payment_id ............................. [RUN]

09:06:19 4 of 38 OK loaded seed file dev_test_dd_250422_h33bx_jaffle_shop_data.raw_orders [INSERT 99 in 3.21s]

09:06:19 15 of 38 START test accepted_values_raw_orders_status__completed__returned__return_pending__shipped__placed [RUN]

09:06:19 16 of 38 START test not_null_raw_orders_user_id ................................ [RUN]

09:06:19 3 of 38 OK loaded seed file dev_test_dd_250422_h33bx_jaffle_shop_data.raw_customers [INSERT 100 in 3.32s]

09:06:19 17 of 38 START sql view model dev_test_dd_250422_h33bx_jaffle_shop_data.stg_customers [RUN]

09:06:19 10 of 38 PASS not_null_my_second_dbt_model_id .................................. [PASS in 0.73s]

09:06:19 11 of 38 PASS unique_my_second_dbt_model_id .................................... [PASS in 0.75s]

09:06:19 17 of 38 OK created sql view model dev_test_dd_250422_h33bx_jaffle_shop_data.stg_customers [CREATE VIEW (0 processed) in 0.32s]

09:06:19 18 of 38 START test not_null_stg_customers_customer_id ......................... [RUN]

09:06:19 19 of 38 START test unique_stg_customers_customer_id ........................... [RUN]

09:06:19 16 of 38 PASS not_null_raw_orders_user_id ...................................... [PASS in 0.43s]

09:06:19 15 of 38 PASS accepted_values_raw_orders_status__completed__returned__return_pending__shipped__placed [PASS in 0.55s]

09:06:19 20 of 38 START sql view model dev_test_dd_250422_h33bx_jaffle_shop_data.stg_orders [RUN]

09:06:19 13 of 38 PASS not_null_stg_payments_payment_id ................................. [PASS in 0.74s]

09:06:19 12 of 38 PASS accepted_values_stg_payments_payment_method__credit_card__coupon__bank_transfer__gift_card [PASS in 0.74s]

09:06:19 14 of 38 PASS unique_stg_payments_payment_id ................................... [PASS in 0.77s]

09:06:20 20 of 38 OK created sql view model dev_test_dd_250422_h33bx_jaffle_shop_data.stg_orders [CREATE VIEW (0 processed) in 0.35s]

09:06:20 21 of 38 START test accepted_values_stg_orders_status__placed .................. [RUN]

09:06:20 22 of 38 START test not_null_stg_orders_order_id ............................... [RUN]

09:06:20 23 of 38 START test unique_stg_orders_order_id ................................. [RUN]

09:06:20 18 of 38 PASS not_null_stg_customers_customer_id ............................... [PASS in 0.68s]

09:06:20 19 of 38 PASS unique_stg_customers_customer_id ................................. [PASS in 0.73s]

09:06:20 21 of 38 WARN 4 accepted_values_stg_orders_status__placed ...................... [WARN 4 in 0.73s]

09:06:20 22 of 38 PASS not_null_stg_orders_order_id ..................................... [PASS in 0.73s]

09:06:20 23 of 38 PASS unique_stg_orders_order_id ....................................... [PASS in 0.74s]

09:06:20 24 of 38 START sql table model dev_test_dd_250422_h33bx_jaffle_shop_data.customers [RUN]

09:06:20 25 of 38 START sql incremental model dev_test_dd_250422_h33bx_jaffle_shop_data.orders [RUN]

09:06:22 24 of 38 OK created sql table model dev_test_dd_250422_h33bx_jaffle_shop_data.customers [CREATE TABLE (62.0 rows, 6.0 KiB processed) in 2.02s]

09:06:22 26 of 38 START test not_null_customers_customer_id ............................. [RUN]

09:06:22 27 of 38 START test unique_customers_customer_id ............................... [RUN]

09:06:23 27 of 38 PASS unique_customers_customer_id ..................................... [PASS in 0.55s]

09:06:23 26 of 38 PASS not_null_customers_customer_id ................................... [PASS in 0.56s]

09:06:26 25 of 38 OK created sql incremental model dev_test_dd_250422_h33bx_jaffle_shop_data.orders [SCRIPT (21.7 KiB processed) in 5.77s]

09:06:26 28 of 38 START test accepted_values_orders_status__placed__return_pending__returned [RUN]

09:06:26 29 of 38 START test not_null_orders_amount ..................................... [RUN]

09:06:26 30 of 38 START test not_null_orders_bank_transfer_amount ....................... [RUN]

09:06:26 31 of 38 START test not_null_orders_coupon_amount .............................. [RUN]

09:06:26 32 of 38 START test not_null_orders_credit_card_amount ......................... [RUN]

09:06:26 33 of 38 START test not_null_orders_customer_id ................................ [RUN]

09:06:26 34 of 38 START test not_null_orders_gift_card_amount ........................... [RUN]

09:06:26 35 of 38 START test not_null_orders_order_id ................................... [RUN]

09:06:26 36 of 38 START test relationships_orders_customer_id__customer_id__ref_customers_ [RUN]

09:06:26 37 of 38 START test unique_orders_order_id ..................................... [RUN]

09:06:27 32 of 38 PASS not_null_orders_credit_card_amount ............................... [PASS in 0.61s]

09:06:27 34 of 38 PASS not_null_orders_gift_card_amount ................................. [PASS in 0.64s]

09:06:27 35 of 38 PASS not_null_orders_order_id ......................................... [PASS in 0.64s]

09:06:27 37 of 38 PASS unique_orders_order_id ........................................... [PASS in 0.65s]

09:06:27 29 of 38 PASS not_null_orders_amount ........................................... [PASS in 0.67s]

09:06:27 31 of 38 PASS not_null_orders_coupon_amount .................................... [PASS in 0.68s]

09:06:27 33 of 38 PASS not_null_orders_customer_id ...................................... [PASS in 0.76s]

09:06:27 30 of 38 PASS not_null_orders_bank_transfer_amount ............................. [PASS in 0.77s]

09:06:27 28 of 38 WARN 2 accepted_values_orders_status__placed__return_pending__returned [WARN 2 in 0.83s]

09:06:27 38 of 38 START snapshot jaffle_shop_snapshot_k8s.snsh_orders ................... [RUN]

09:06:27 36 of 38 PASS relationships_orders_customer_id__customer_id__ref_customers_ .... [PASS in 0.92s]

09:06:30 Data type of snapshot table timestamp columns (TIMESTAMP) doesn't match derived column 'updated_at' (DATE). Please update snapshot config 'updated_at'.

09:06:31 38 of 38 OK snapshotted jaffle_shop_snapshot_k8s.snsh_orders ................... [MERGE (0.0 rows, 12.0 KiB processed) in 4.21s]

09:06:31

09:06:31 1 of 1 START hook: re_data.on-run-end.0 ........................................ [RUN]

09:06:31 1 of 1 OK hook: re_data.on-run-end.0 ........................................... [OK in 0.01s]

09:06:31

09:06:31 Finished running 1 incremental model, 1 project hook, 3 seeds, 1 snapshot, 3 table models, 26 data tests, 4 view models in 0 hours 0 minutes and 17.71 seconds (17.71s).

09:06:32

09:06:32 Completed with 2 warnings:

09:06:32

09:06:32 Warning in test accepted_values_stg_orders_status__placed (models/jaffle_shop/staging/schema.yml)

09:06:32 Got 4 results, configured to warn if != 0

09:06:32

09:06:32 compiled code at target/compiled/jaffle_shop_dbt_elt_k8s/models/jaffle_shop/staging/schema.yml/accepted_values_stg_orders_status__placed.sql

09:06:32

09:06:32 Warning in test accepted_values_orders_status__placed__return_pending__returned (models/jaffle_shop/schema.yml)

09:06:32 Got 2 results, configured to warn if != 0

09:06:32

09:06:32 compiled code at target/compiled/jaffle_shop_dbt_elt_k8s/models/jaffle_shop/schema.yml/accepted_values_orders_status__placed__return_pending__returned.sql

09:06:32

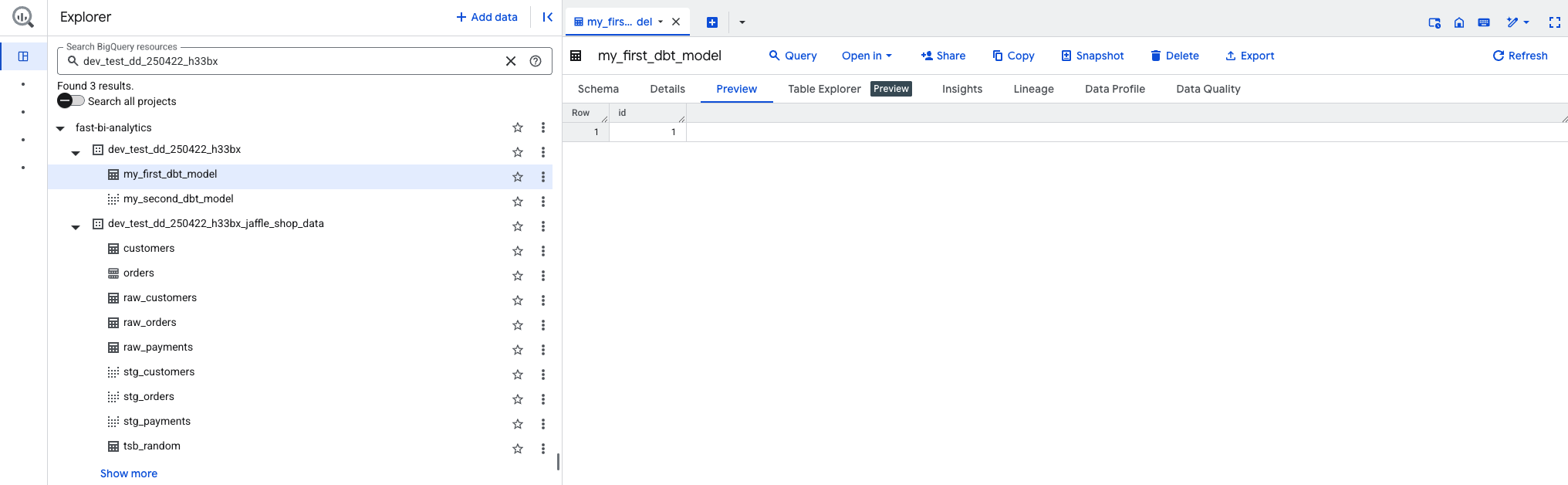

09:06:32 Done. PASS=37 WARN=2 ERROR=0 SKIP=0 TOTAL=39

This time, with the issues resolved, your models should work correctly. Continue refining your DataMart until you achieve the desired results.

Now go back to the main page and click the BigQuery icon to find your extracted data in the Data Warehouse environment.
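The final "Done." line of the run is the quickest place to confirm the result. As a minimal sketch, assuming the summary keeps the KEY=number format shown in the log above, a small shell helper can pull out individual counters. The function name dbt_summary_field is hypothetical and not part of dbt.

```shell
# Hypothetical helper: pull one counter (PASS, WARN, ERROR, SKIP, TOTAL)
# out of a dbt summary line such as
#   "Done. PASS=37 WARN=2 ERROR=0 SKIP=0 TOTAL=39"
dbt_summary_field() {
  local line="$1" key="$2"
  # Print only the digits that follow "KEY=".
  printf '%s\n' "$line" | sed -n "s/.*[[:space:]]${key}=\([0-9][0-9]*\).*/\1/p"
}

# Summary line copied from the log above.
summary='09:06:32 Done. PASS=37 WARN=2 ERROR=0 SKIP=0 TOTAL=39'
errors="$(dbt_summary_field "$summary" ERROR)"
warnings="$(dbt_summary_field "$summary" WARN)"

if [ "$errors" -eq 0 ]; then
  echo "dbt run finished without errors (${warnings} warnings to review)"
else
  echo "dbt run reported ${errors} errors" >&2
fi
```

A zero ERROR count with a nonzero WARN count, as in this run, means the models were built but the warned tests are still worth reviewing.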

¶ Generating Metadata Columns

Now, you're ready to generate a metadata configuration for your models.

- Command: lightdash validate --profiles-dir .lightdash --exclude package:re_data --target dev --verbose [ Link ] To validate your dbt models and check that their metadata configuration is compatible with the Lightdash BI visualization service.

coder@jupyter-terasky@fast.bi:~/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s$ lightdash validate \

--profiles-dir .lightdash --exclude package:re_data --target dev --verbose

> dbt version 1.9.2

> Compiling with project dir /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s

> Loading dbt_project.yml file from: /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s

> dbt target directory: target

> Using profiles dir /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/.lightdash and profile jaffle_shop_dbt_elt_k8s

> Using target bigquery

> Running: dbt ls --project-dir . --profiles-dir .lightdash --exclude package:re_data --target dev --output json --output-keys unique_id --quiet

> Models: model.jaffle_shop_dbt_elt_k8s.customers model.jaffle_shop_dbt_elt_k8s.my_first_dbt_model model.jaffle_shop_dbt_elt_k8s.my_second_dbt_model \

model.jaffle_shop_dbt_elt_k8s.orders model.jaffle_shop_dbt_elt_k8s.stg_customers model.jaffle_shop_dbt_elt_k8s.stg_orders \

model.jaffle_shop_dbt_elt_k8s.stg_payments model.jaffle_shop_dbt_elt_k8s.tsb_random

> Loading dbt manifest from /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/target/manifest.json

Getting joined models for tsb_random

Getting joined models for orders

Getting joined models for customers

Getting joined models for stg_orders

Getting joined models for stg_payments

Getting joined models for stg_customers

Getting joined models for my_second_dbt_model

Getting joined models for my_first_dbt_model

> Loading dbt manifest from /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/target/manifest.json

> Validating 8 models from dbt manifest

> Validating models using dbt manifest version v12

> Valid compiled models (8): tsb_random, orders, customers, stg_orders, stg_payments, stg_customers, my_second_dbt_model, my_first_dbt_model

> Fetching warehouse catalog

> Converting explores with adapter: bigquery

> Loading lightdash project config from /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s

No lightdash.config.yml found in /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/lightdash.config.yml

> Loaded lightdash project config

- SUCCESS> tsb_random

- SUCCESS> orders

- SUCCESS> customers

- SUCCESS> stg_orders

- SUCCESS> stg_payments

- SUCCESS> stg_customers

- SUCCESS> my_second_dbt_model

- SUCCESS> my_first_dbt_model

Compiled 8 explores, SUCCESS=8 ERRORS=0

> Compiled 8 explores

No project specified, select a project to validate using --project <projectUuid> or create a preview environment using lightdash \

start-preview or configure your default project using lightdash config set-project

Note: Ignore the last message, "No project specified, select a project to validate using --project <projectUuid> or create a preview environment using lightdash start-preview or configure your default project using lightdash config set-project." Fast.BI platform automation will create the Lightdash BI visualization project when the new dbt project is merged into the master branch.
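Because the run ends with a message that should be ignored, the "ERRORS=" counter in the output is a more direct signal than the final line. The following is a minimal sketch, assuming the summary line keeps the format shown above; the function name lightdash_compile_errors is hypothetical and not part of the Lightdash CLI.

```shell
# Hypothetical helper: read saved "lightdash validate" output on stdin and
# print the number that follows the last "ERRORS=" counter.
lightdash_compile_errors() {
  grep -o 'ERRORS=[0-9][0-9]*' | tail -n 1 | cut -d= -f2
}

# Sample lines copied from the output above.
sample_output='- SUCCESS> my_first_dbt_model
Compiled 8 explores, SUCCESS=8 ERRORS=0
> Compiled 8 explores'

errors="$(printf '%s\n' "$sample_output" | lightdash_compile_errors)"

# A missing counter is treated as a failure rather than as zero errors.
if [ "${errors:-1}" -eq 0 ]; then
  echo "All explores compiled"
else
  echo "Lightdash reported compile errors or no summary line was found" >&2
fi
```

To use it on a real run, the command output can be saved first (for example by redirecting both stdout and stderr to a file, since which stream carries the summary is an assumption here) and then passed to the helper on stdin.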

- Command: lightdash generate --profiles-dir .lightdash --exclude package:re_data --target dev --verbose [ Link ] To generate dbt model metadata. Follow the generation wizard if it appears, and type 'Y' to generate metadata for all models.

coder@jupyter-terasky@fast.bi:~/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s$ lightdash generate --profiles-dir .lightdash --exclude package:re_data --target dev --verbose

> Loading dbt_project.yml file from: /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s

> dbt target directory: target

> Using profiles dir /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/.lightdash and profile jaffle_shop_dbt_elt_k8s

> Using target bigquery

> Loading dbt manifest from /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/target/manifest.json

> Compiled models: 8

Generated .yml files:

✔ tsb_random ➡️ models/tsb/schema.yml

✔ orders ➡️ models/jaffle_shop/schema.yml

✔ customers ➡️ models/jaffle_shop/schema.yml

✔ stg_orders ➡️ models/jaffle_shop/staging/schema.yml

✔ stg_payments ➡️ models/jaffle_shop/staging/schema.yml

✔ stg_customers ➡️ models/jaffle_shop/staging/schema.yml

✔ my_second_dbt_model ➡️ models/example/schema.yml

✔ my_first_dbt_model ➡️ models/example/schema.yml

Done 🕶

When metadata generation is complete, you'll find the meta: configuration key in your model schema.

version: 2

models:
  - name: my_first_dbt_model
    description: "A starter dbt model"
    columns:
      - name: id
        description: "The primary key for this table"
        data_tests:
          - unique
          - not_null
        meta:
          dimension:
            type: number

  - name: my_second_dbt_model
    description: "A starter dbt model"
    columns:
      - name: id
        description: "The primary key for this table"
        data_tests:
          - unique
          - not_null
        meta:
          dimension:
            type: number

Note: All Lightdash CLI commands may take time, as the workspace cloud IDE has limited resources.
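To confirm which schema files actually received a meta: key after generation, a quick search over the models folder can help. This is a minimal sketch; the function name schemas_with_meta is hypothetical, and it only checks that the key is present, not that its content is valid.

```shell
# Hypothetical helper: list the *.yml files under a directory that contain
# a "meta:" key at any indentation level.
schemas_with_meta() {
  find "$1" -type f -name '*.yml' -exec grep -l '^[[:space:]]*meta:' {} + | sort
}

# Usage from the dbt project folder (not executed here):
# schemas_with_meta models
```

Any schema file missing from the list was either not touched by the generator or has no columns with generated metadata.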

¶ Cleaning - Formatting Your dbt Project Model Files

Now, you're ready to test your dbt project files' format and quality.

- Command: sqlfluff lint --annotation-level failure -p 8 . [ Link ] To check your SQL file format. Before running this command, open the .sqlfluff file and change line 12 from 'target = sa' to 'target = dev'.

coder@jupyter-terasky@fast.bi:~/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s$ sqlfluff lint --annotation-level failure -p 8

=== [dbt templater] Sorting Nodes...

=== [dbt templater] Compiling dbt project...

=== [dbt templater] Project Compiled.

WARNING File /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/target/compiled/ \

jaffle_shop_dbt_elt_k8s/models/example/my_first_dbt_model.sql was not found in dbt project

== [/home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/models/jaffle_shop/staging/stg_orders.sql] FAIL

L: 9 | P: 1 | LT04 | Found leading comma ','. Expected only trailing near

| line breaks. [layout.commas]

WARNING File /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/target/compiled/jaffle_shop_dbt_elt_k8s/models/ \

example/my_second_dbt_model.sql was not found in dbt project

WARNING File /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/target/compiled/jaffle_shop_dbt_elt_k8s/models/ \

jaffle_shop/customers.sql was not found in dbt project

== [/home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/models/jaffle_shop/orders.sql] FAIL

L: 12 | P: 1 | LT05 | Line is too long (83 > 80). [layout.long_lines]

L: 17 | P: 1 | LT04 | Found leading comma ','. Expected only trailing near

| line breaks. [layout.commas]

WARNING File /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/target/compiled/jaffle_shop_dbt_elt_k8s/models/tsb/tsb_random.sql \

was not found in dbt project

All Finished 📜 🎉!

Note: Ignore the WARNING messages. After you finish testing, change the setting back to 'target = sa' or another production target as needed.
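Switching the target by hand before and after linting is easy to forget. As a minimal sketch, assuming the .sqlfluff file contains a single line of the form 'target = ...', the change can be scripted. The function name set_sqlfluff_target and the sample file content are hypothetical; the demo works on a temporary file, not on your real .sqlfluff.

```shell
# Hypothetical helper: rewrite the "target = ..." line of a SQLFluff config.
# Assumes a simple target name (letters, digits, underscores).
set_sqlfluff_target() {
  local file="$1" target="$2" tmp status
  tmp="$(mktemp)" || return 1
  # Keep the "target = " prefix as written and replace only the value.
  sed "s/^\([[:space:]]*target[[:space:]]*=[[:space:]]*\).*/\1${target}/" "$file" > "$tmp" &&
    cat "$tmp" > "$file"
  status=$?
  rm -f "$tmp"
  return "$status"
}

# Demo on a throwaway file with illustrative content.
sample="$(mktemp)"
printf '[sqlfluff:templater:dbt]\ntarget = sa\n' > "$sample"
set_sqlfluff_target "$sample" dev
grep '^target' "$sample"   # prints: target = dev
rm -f "$sample"
```

In the project folder this would be called as set_sqlfluff_target .sqlfluff dev before linting and set_sqlfluff_target .sqlfluff sa afterwards.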

- Command: dbt clean [ Link ] To clean up your dbt project's temporary files.

coder@jupyter-terasky@fast.bi:~/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s$ dbt clean

09:30:45 Running with dbt=1.9.2

09:30:45 Checking /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/target/*

09:30:45 Cleaned /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/target/*

09:30:45 Checking /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/dbt_modules/*

09:30:45 Cleaned /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/dbt_modules/*

09:30:45 Checking /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/logs/*

09:30:45 Cleaned /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/logs/*

09:30:45 Checking /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/dbt_packages/*

09:30:45 Cleaned /home/coder/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s/dbt_packages/*

09:30:45 Finished cleaning all paths.

- Command: yamllint -c yamllint-config.yaml -f colored . [ Link ] To check if dbt project YAML files have a correct format.

coder@jupyter-terasky@fast.bi:~/fast_bi_notebook/dbt-data-model/jaffle_shop_dbt_elt_k8s$ yamllint -c yamllint-config.yaml -f colored .

./models/jaffle_shop/schema.yml

113:24 error too many spaces inside brackets (brackets)

113:63 error too many spaces inside brackets (brackets)

Note: An empty output means your dbt project *.yml files are well formatted. YAML file format is important for Fast.BI platform automation: if any dbt project YAML file is badly structured, the automation may stop and the merge process could fail.

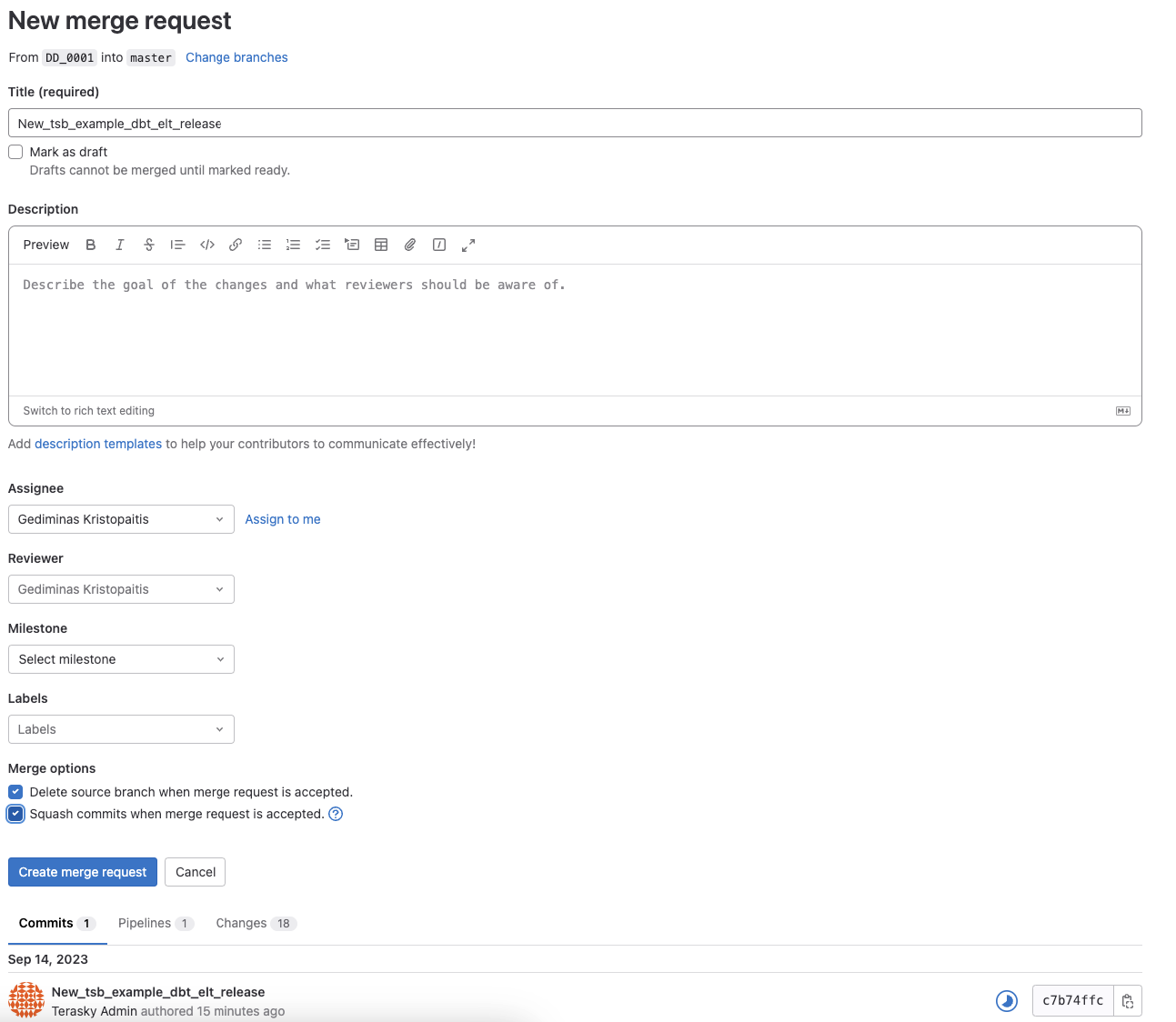
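The format checks in this section can be chained so that a failure stops the sequence before you move on to the release. This is a minimal sketch, assuming each tool returns a nonzero exit status when it reports errors; the function name run_checks is hypothetical.

```shell
# Hypothetical helper: run each check command in order and stop at the
# first one that fails.
run_checks() {
  local check
  for check in "$@"; do
    echo "==> ${check}"
    # sh -c lets each check be passed as one quoted command line.
    if ! sh -c "$check"; then
      echo "FAILED: ${check}" >&2
      return 1
    fi
  done
  echo "All checks passed"
}

# Usage with the commands from this section (not executed here):
# run_checks \
#   "sqlfluff lint --annotation-level failure -p 8 ." \
#   "yamllint -c yamllint-config.yaml -f colored ."
```

Running the linters before dbt clean matters only if a check depends on compiled files; the order above simply follows the steps in this section.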

¶ Releasing Your dbt Project

Now, you're ready to release your dbt project to production.

- Command: git config --global user.email "terasky@fast.bi" && git config --global user.name "Terasky Admin". Configure your Git identity; change the email address and user name to your own.

- Command: cd .. && git add -A. Return to the main 'dbt-data-model' folder and add all your newly created files to the branch.

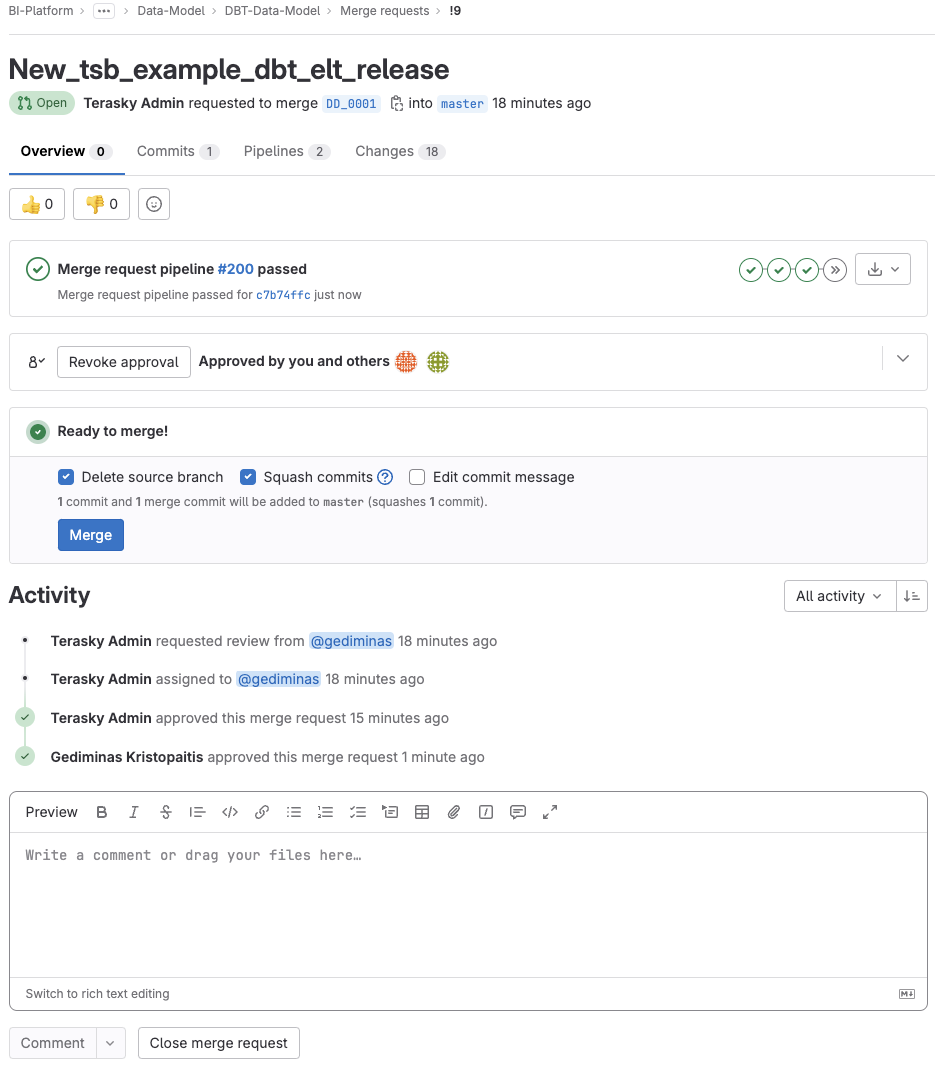

- Command: git commit -am "New_tsb_example_dbt_elt_release". Commit your working branch with the new changes.

- Command: git push --set-upstream origin DD_0001. Push your local branch to the remote repository. Now you can open the main page, click on the GitLab icon, and proceed with your merge request. Alternatively, click the link provided in the command output.

- Select the Assignee/Reviewer.

- Click on "Create merge request."
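The stage, commit, and push steps above can be wrapped in one function so that a failed step stops the sequence. This is a minimal sketch; the function name release_branch is hypothetical, and it assumes you are already in the repository folder, on your working branch, with a remote named origin.

```shell
# Hypothetical helper wrapping the release steps above.
# Arguments: branch name, commit message.
release_branch() {
  local branch="$1" message="$2"
  # Stage everything, commit, then push and set the upstream branch.
  # "git add -A" already stages all changes, so plain "commit -m" is enough.
  git add -A &&
    git commit -m "$message" &&
    git push --set-upstream origin "$branch"
}

# Usage from the dbt-data-model folder (not executed here):
# release_branch DD_0001 "New_tsb_example_dbt_elt_release"
```

The merge request itself is still created in GitLab as described in the steps above.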

Wait until Fast.BI CI/CD automation finishes all pipelines and merges to production. Your dbt project is now ready for production use.

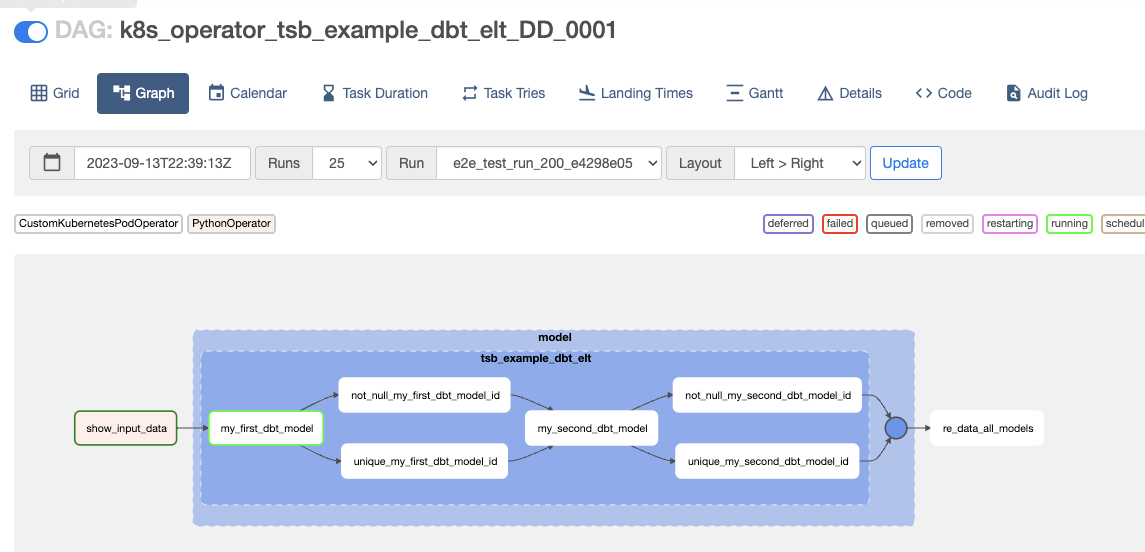

Your new dbt project will undergo a full End-to-End production-ready test. You can find your pipeline flow in Airflow.

Once the merge request has received all necessary approvals and the CI/CD process has successfully finished, you can merge your request into the main branch. Click the "Merge" button.

Congratulations! You have successfully released your first dbt project to production.